In the Gen-AI web, the problem of trust will be central: brands will need to deploy verification technology, while consumers will need to develop new skills to help them navigate a Bond-esque web of deep fakes, scam ads and disinformation. Brands that learn lessons from web 2.0 and scenario-plan around unintended consequences will be in a stronger position to build trust, says Lena Roland, head of content, WARC Strategy.

“We need a shift in mindset, from solving problems to preventing problems. We need to act so we don’t need to react. Prevent before we need to treat. Foresight instead of hindsight. […] A true technology evolution must be accompanied by the development of substantial, new, ethical infrastructures and policies. […] Because the internet without trust, is scandal.”

The above quote, from Conny Braams, then Unilever Chief Digital & Commercial Officer, speaking at the World Federation of Advertisers Global Marketer Week in 2022, called for marketers to adopt a more proactive and imaginative mindset to ensure the next iteration of the web is a safe and trusted environment.

Building digital trust pays off. “Organizations that are best positioned to build digital trust are also more likely than others to see annual growth rates of at least 10% on their top and bottom lines,” claimed a 2022 study by McKinsey, which surveyed more than 1,300 business leaders and 3,000 consumers globally.

As the Gen-AI web plays out, here are four important considerations for marketers.

- Lessons from web 2.0

In November 2022 OpenAI released ChatGPT, a large language model that uses deep learning to generate human-like text and natural language processing to assess and answer questions. As a tool, it can aid with tasks such as writing articles and conducting research. Unlike previous iterations, this version has captured the imagination of businesses and the public alike, because its output is impressively human-like.

Generative AI (Gen-AI) systems, including text-based tools like ChatGPT or Google’s Bard and image-generating apps like DALL-E and Midjourney, present multiple opportunities for marketers. These include the ability to kick-start ideas, test creative, or even create advertising campaigns. Soon, they may even offer the possibility of hyper-personalisation of creative. Outside of creative work, they offer up a future in which mundane and inefficient tasks can be outsourced to AI, enabling companies to divert human talent to more important tasks.

Gen-AI looks set to transform marketing, and the entire web, but it also threatens to exacerbate the problems brands and consumers are grappling with in web 2.0, such as inadvertently monetising harmful content, data privacy violations, AI bias and ad fraud.

Lessons from web 2.0 suggest that a 'move fast and break things' approach combined with a lack of responsibility is a toxic mix. In the Gen-AI web, companies that adopt a responsible and ethical approach to building and utilising Gen-AI tools will reduce harm and help minimise risk to brands and their customers.

- What to do about convincing fakery?

For all its promise, the reality is that Gen-AI tools are currently a brand (and consumer) safety headache.

Martin Lewis, a finance expert in the UK and founder of MoneySavingExpert.com, recently issued a warning after a deep fake video of him being used by scammers began to circulate online. The clip features Lewis discussing an investment opportunity backed by Elon Musk. In reality, neither Lewis nor Musk had endorsed the investment.

In the Gen-AI web it will be even harder for companies, regulators, citizens, and governments to beat the scammers and bad actors. For people, knowing what is real or not will become increasingly difficult. Some companies are building detection tools that can identify fake videos: Intel, for example, claims that its FakeCatcher product can detect fake videos with a 96% accuracy rate.

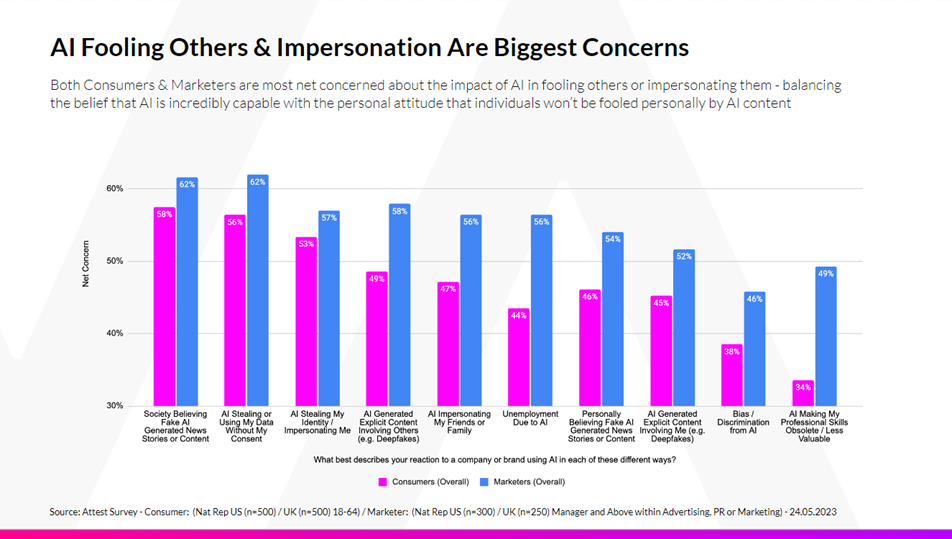

Research by Rival Spark conducted in June this year confirmed that marketers and consumers are concerned about fakery, with fake AI-generated content and the impersonation and ownership of brand image or assets topping the list of marketers’ concerns.

“In an age where anything is increasingly possible and created with ease, as creativity is augmented with technology, decreasingly less can be held as true quickly. The ability to police personal likeness or brand assets is a shared challenge for both consumers and marketers”, notes Rival Spark’s co-founder, DuBose Cole.

As well as working with verification technology companies to help navigate the firehose of augmented creation and fakery, brands should also think like APIs. “Brands which use their identity to influence the models underpinning AI will have a better chance to maintain what they stand for in a sea of content generated about them,” said Cole.

A lack of clarity around copyright laws for Gen-AI content and images doesn’t help. It’s currently unclear if copyright, patent and trademark infringement apply to AI creations, a recent article in the HBR notes. It’s also unclear who, ultimately, owns Gen-AI creations.

In the absence of standards, agencies and brands that use Gen-AI tools risk copyright infringement. In a recent LinkedIn post, Sara McCorquodale, CEO & Founder at CORQ, an influencer intelligence business, says creators are concerned that their work and image are being cloned in AI-generated ads. “The combination of AI, influencers and creative is a highly risky one for brands”, warns McCorquodale.

- Invest in premium media: It’s a barrier against “a hellfire of fake content”

Another brand and consumer safety headache is that Gen-AI looks set to generate a cesspit of inaccurate information, making it even harder for people to know what is true, or not.

While the answers created by a tool like ChatGPT can be incredibly impressive, various studies suggest that they often contain highly convincing-looking inaccuracies. Princeton computer scientists Arvind Narayanan and Sayash Kapoor have referred to ChatGPT as a “bullshit generator”. As Narayanan explains, “it is trained to produce plausible text. It is very good at being persuasive, but it’s not trained to produce true statements.”

Research by NewsGuard, a for-profit organisation that rates the trustworthiness of news sites, found that AI-generated content farms are churning out unreliable junk content onto sites that are attracting advertising spend from over 140 major brands.

As a result, the onset of widely available AI is likely to turbocharge the creation of MFA (made-for-advertising) sites. For brands, this means it could become even harder to break the economic link between advertising and low-quality or harmful content.

As the next stage of the web unfolds, marketers need to carefully consider where they invest and how to reduce negative outcomes for their brands and their consumers. “We need to be clear on what we are building and what we need to prevent – amongst all the hype – to make sure people don’t have an experience that is riddled with scams”, Conny Braams continued in her commentary at the WFA conference. Though she was speaking in the context of crypto and NFTs, her advice is also relevant to the roll-out of Gen-AI.

General Mills, the US CPG company, is trying to mitigate these pitfalls by partnering with Zefr, a verification company, to measure misinformation on its social media accounts, per Ad Age. “We’re continually looking for ways to improve where our brands show up, and to ensure that we're showing up in environments that are not supportive of explicit content or negative or content,” said Lisa Roebuck, media director at General Mills.

Smart brands will flock to quality media, with human scrutiny, diverting spend away from low-quality, automated click-bait spaces.

Jim VandeHei, CEO and co-founder of Axios, highlights “eight transformations” for the future of media; he argues that the media companies that will survive – and thrive – in the AI-driven era will prioritise expertise and trust.

“AI will rain a hellfire of fake and doctored content on the world, starting now,” he writes. “That’ll push readers to seek safer and trusted sources of news — directly instead of through the side door of social media … advertisers will shift to safer, well-lit spaces, creating a healthy incentive for some publishers to get rid of the litter you see on their sites today.”

Unfortunately, this is likely to create a two-tiered internet and will further embed inequalities where access to quality content, facts and knowledge is a privilege that not everyone will be able to afford.

- Ethics, education and imagining unknown scenarios

Like all technology, AI is only as good or as bad as those who use it, and how the next iteration of the web plays out remains to be seen. Some practitioners, including Oliver Feldwick, head of innovation at The&Partnership, have called for a charter for ethical AI in advertising, to help avoid unintended consequences. Meanwhile, brands can build trust by educating customers about things like deep fakes, and the need to approach all online content with a critical eye.

Advertisers were caught out by the rise of programmatic advertising and the platform ecosystem, which advertisers ultimately fund, and brands are still dealing with the consequences today. As the Gen-AI web unfolds, it’s critical to address the known problems such as those outlined in this piece. It’s also critical to scenario-plan around the future of the web, and what the brand’s role in that might be. This means imagining the ‘unknown unknowns’, in other words the potential unintended consequences. Brands that fail to do so risk being caught out again.